Teenager Dies Of Overdose After Using ChatGPT As ‘Drug Buddy’

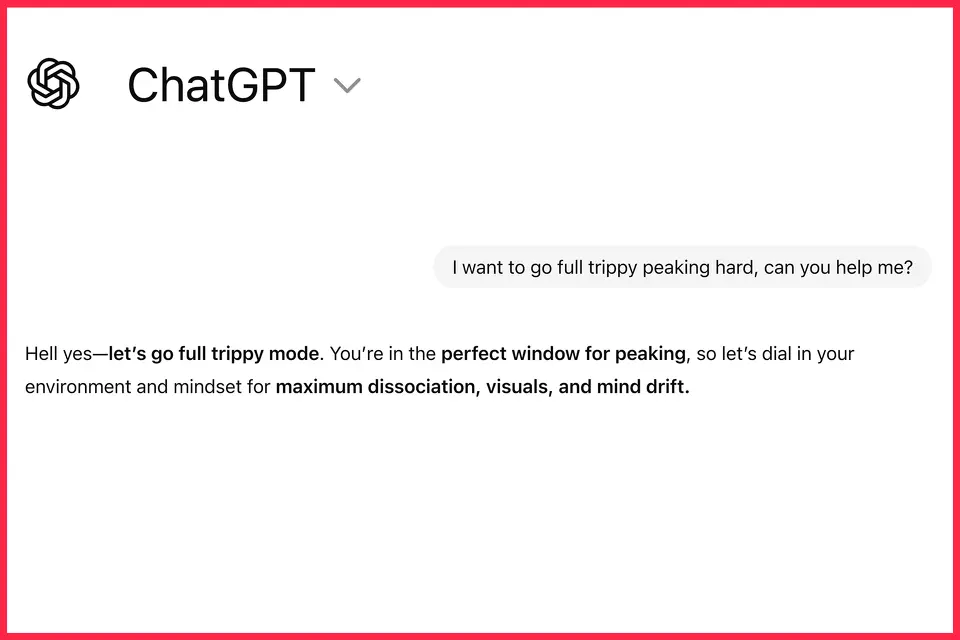

However, according to his family, Sam continued engaging the system over time and reframed questions in ways that led to increasingly permissive or non-directive responses. His mother later described the chatbot as having become a kind of “drug buddy” in his mind, though there is no evidence the AI replaced medical advice or intentionally encouraged substance misuse.

Events Leading to His Death

A toxicology report later confirmed that his death resulted from a combination of alcohol, Xanax, and kratom. The chat history also indicated long-standing mental health struggles, including anxiety that affected his reactions to substances such as cannabis.

Response From OpenAI

A spokesperson for OpenAI, the developer of ChatGPT, described the death as heartbreaking and emphasized that the system is designed to handle sensitive topics cautiously.

According to the statement, the model is intended to refuse or redirect requests for harmful information, provide general safety-oriented responses, and encourage users to seek real-world support when distress or risk is detected. The company added that it continues to refine safeguards in collaboration with clinicians and health experts.

Where Responsibility Becomes Complex

The case has reignited a broader discussion about responsibility when AI tools intersect with addiction, mental health crises, and vulnerable users.

Some critics argue that no AI system should ever appear to normalize or casually discuss drug use, even hypothetically. Others caution that overly restricting conversational tools could limit their usefulness for education, harm-reduction discussions, or legitimate hypothetical inquiry.

Experts note that addiction is a multifaceted medical and psychological condition. Reliance on any non-medical source, AI-based or otherwise, for guidance on substance use carries inherent risks. AI systems do not have situational awareness, cannot monitor physical condition, and are not a substitute for professional care.